I published a post on Elm Silent Teacher, an educational game designed to help you learn the Elm programming language through interactive exercises.

https://michaelrätzel.com/blog/elm-silent-teacher-an-interactive-way-to-learn-elm

As I assist beginners in getting started with Elm, I often wonder how we can make learning this programming language easier. That is how I discovered the ‘Silent Teacher’ shared by @Janiczek at https://discourse.elm-lang.org/t/silent-teacher-a-game-to-teach-basics-of-programming/1490

These compact, interactive learning experiences are an excellent resource for students, so I expanded on the approach and developed it further.

The core loop - learning through bite-sized exercises

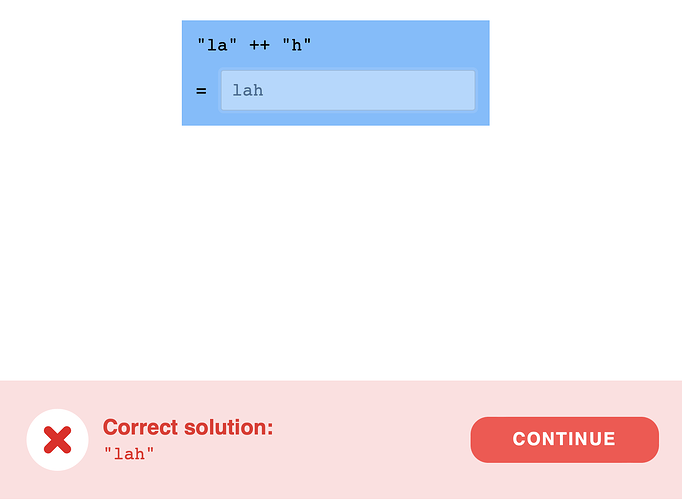

In the core interaction loop, the app presents an Elm expression and prompts us to evaluate it.

Of course, evaluating an expression in our head requires some knowledge of the programming language. So we enter our best guess and, at first, often get it wrong.
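
To give a feel for the kind of expression involved, here is a small, hypothetical set of examples. These are not necessarily the exercises used in the course; the names are made up for illustration, and the comments show what each expression evaluates to.

```elm
module ExampleExpressions exposing (..)

-- Hypothetical sample expressions, not taken from the actual course.


lengthOfHello : Int
lengthOfHello =
    -- evaluates to 5
    String.length "hello"


doubled : List Int
doubled =
    -- evaluates to [ 2, 4, 6 ]
    List.map (\n -> n * 2) [ 1, 2, 3 ]


shouted : String
shouted =
    -- evaluates to "ELM"
    String.toUpper "elm"
```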

After submitting an answer, the system checks it for correctness. If the answer is incorrect, the Silent Teacher points out the correct answer. Once we are ready for the next challenge, we can continue with a new, similar exercise on the same topic.

As we get more exercises right, the system shows more advanced challenges. Through many small repetitions, we gradually learn about programming language elements and functions from the core libraries.

Elm Silent Teacher - evolved

Compared to the earlier implementation, I adopted a different approach for encoding exercises, simplifying the authoring process. Course authors no longer need to provide a function that computes the correct answer. An interpreter now takes care of this part automatically, running the evaluation on the user’s device in the web browser.
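
The idea can be sketched roughly as follows. This is a minimal illustration under assumptions, not the actual code from the repository: the `Exercise` record, the `Feedback` type, and the `evaluate` parameter (standing in for the interpreter) are all hypothetical names.

```elm
module ExerciseSketch exposing (Exercise, Feedback(..), checkAnswer)

-- Hypothetical sketch: an exercise carries only the expression to show.
-- No hand-written function for computing the answer is needed.


type alias Exercise =
    { expression : String }


type Feedback
    = Correct
    | Incorrect { correctAnswer : String }
    | EvaluationFailed String


{-| `evaluate` stands in for the interpreter. Its signature is an assumption
made for this sketch: it takes the expression text and returns either an
error message or the textual form of the resulting value.
-}
checkAnswer : (String -> Result String String) -> Exercise -> String -> Feedback
checkAnswer evaluate exercise submitted =
    case evaluate exercise.expression of
        Err message ->
            EvaluationFailed message

        Ok correctAnswer ->
            -- Naive comparison: only surrounding whitespace is ignored.
            if String.trim submitted == correctAnswer then
                Correct

            else
                Incorrect { correctAnswer = correctAnswer }
```

Passing the interpreter in as a function keeps the sketch self-contained; a real implementation would likely also normalize formatting differences before comparing answers.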

Since the interpreter is readily available, users can also experiment with additional expressions. After submitting their answer, they can access a REPL-like sandbox initialized with the exercise’s expression. In this exploration mode, users can modify the expression and observe how the evaluation changes accordingly.

-

To try an example course, visit https://silent-teacher.netlify.app/

-

Elm Silent Teacher is open-source; you can find the code at https://github.com/elm-time/elm-time/tree/main/implement/elm-time/ElmTime/learn-elm

-

You can modify the exercises and customize the learning path in the Exercise module.

If you took the trip, let me know how it went. I’d love to hear your thoughts.